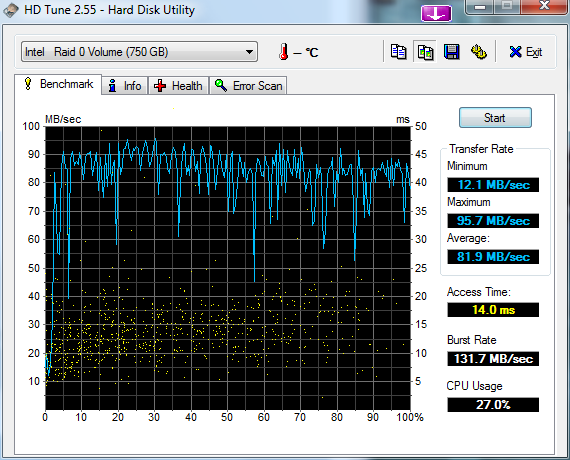

I’ve got some old 250GB drives that are starting to show their age. They’re currently set up as a three-disk RAID 0 array, which presents about 750GB of space.

I’ve got everything on a single partition (meh, I’m lazy). I’ve run various speed tests on the current setup (with all the space allocated), but I thought I’d re-image onto a short-stroked partition, i.e. one that only uses the first part of each disk so the heads have less distance to travel.

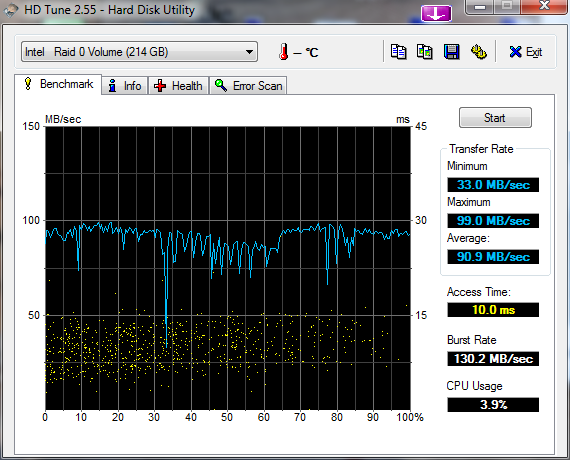

I only use about 150GB of space on my main machine (most of my data lives on another box), so I’m going to try creating a 200GB partition to see whether this gives any kind of performance boost.
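A crude model helps show why a smaller partition should cut seek times. Assume (these are simplifying assumptions, not measurements) that capacity maps linearly onto the head’s stroke and that requests land uniformly at random within the used region. The average head travel between two requests is then one third of the span in use, so using a fraction f of each disk shrinks average travel to f/3 of the full stroke. The sketch below checks that closed form with a quick simulation; the function names are made up for illustration. Treat it as rough intuition rather than a prediction: real seek time includes a settle overhead and isn’t proportional to distance, and outer tracks hold more data per track, so the linear mapping is only approximate.

```python
import random


def mean_seek_fraction(used_fraction: float) -> float:
    """Closed form: mean |X - Y| for X, Y uniform on [0, f] is f / 3.

    Result is expressed as a fraction of the full head stroke.
    Assumes capacity maps linearly onto the stroke (a simplification).
    """
    return used_fraction / 3.0


def simulate_mean_seek(used_fraction: float, trials: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the same quantity, for checking the closed form."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    total = 0.0
    for _ in range(trials):
        a = rng.uniform(0.0, used_fraction)  # position of one request
        b = rng.uniform(0.0, used_fraction)  # position of the next request
        total += abs(a - b)                  # head travel between them
    return total / trials


if __name__ == "__main__":
    # Whole disk vs. a 214GB slice of a 750GB array (about 28.5% of each disk).
    for f in (1.0, 214 / 750):
        print(f"used {f:.1%}: closed form {mean_seek_fraction(f):.3f}, "
              f"simulated {simulate_mean_seek(f):.3f}")
```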

So, after reducing my RAID 0 volume from 750GB to 214GB, here are the results…
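Because RAID 0 stripes data evenly across its members, the partition’s footprint on each disk is simple to work out. This is a rough figure that ignores any space the RAID controller reserves for its own metadata:

$$
\frac{214\ \text{GB}}{3\ \text{drives}} \approx 71.3\ \text{GB per drive},
\qquad
\frac{71.3\ \text{GB}}{250\ \text{GB}} \approx 28.5\%\ \text{of each disk}
$$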

Before, with all 750GB presented…

The same disks, short-stroked to 214GB…

Conclusion: Yip, it seems worth it if you have the spare space. Average throughput is up by 10MB/s, and seek time has improved by almost a third, dropping by 4ms.

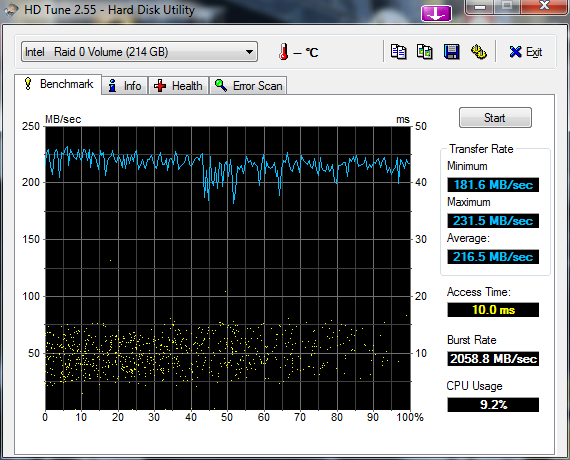

You’ll get an even bigger improvement if you can use a smaller percentage of each drive’s capacity and/or more drives in your stripe.
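To put numbers on the “more drives” point, here’s a hedged sketch that keeps the same 214GB partition and the same 250GB drives and only varies how many drives are in the stripe. Anything beyond three drives is hypothetical, and the helper `per_drive_share` is an illustrative name. It assumes an even RAID 0 stripe and ignores controller metadata.

```python
DRIVE_GB = 250       # per-drive capacity from the post
PARTITION_GB = 214   # short-stroked partition size from the post


def per_drive_share(partition_gb: float, drives: int, drive_gb: float = DRIVE_GB) -> float:
    """Fraction of each member disk that a RAID 0 partition occupies.

    Assumes data is striped evenly across all drives and ignores any
    space reserved by the RAID controller.
    """
    return partition_gb / drives / drive_gb


if __name__ == "__main__":
    # Same partition size, different numbers of drives in the stripe.
    for n in range(2, 7):
        share = per_drive_share(PARTITION_GB, n)
        print(f"{n} drives: {PARTITION_GB / n:.1f} GB per drive ({share:.1%} of each disk)")
```

Each extra drive shrinks the slice of every disk that the heads have to cover, which is why adding drives and using a smaller percentage per drive work in the same direction.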

Updated: 07/02/2010

BTW, the above was without write-back cache enabled. With it turned on, I got the following…